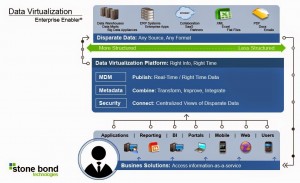

I’m a datum, tra-la, tra-la, just humming along, happier than most of my colleagues now that Data Virtualization has come into my life. I’m what they call a piece of master data, or rather a source of record. That has been quite a challenge, since I used to get cloned over and over, morphed, and turned upside down. It took lots of time and seemed necessary because they couldn’t quite get to ME, the original: unvarnished and clean as a whistle. My colleagues still deal with this. They get copied to staging databases all the time, leaving versions of themselves scattered in many places, with a constant, complex tangle of synchronization and updates across all those databases. Just imagine the time it takes to build all those comings and goings! Often it’s not even clear what the original, real value is or where it all began. Of course, all this is done with such a gallimaufry of tools and custom code that the information is stale by the time it is used. And everyone knows that the higher the gallimaufry rating, the more tech debt piles up. Bad stuff.

With this new Data Virtualization approach, I get to stay right where I am, and whenever I’m called upon, I send a fresh virtual ME instantly. I say “virtual” because no copy of me is made; only a ghost of me is transmitted, combined with other data, usually on its way to a browser or to feed some analytics algorithm. Here’s where my psychiatrist has to get involved, because I have an existential dilemma. Does the ghost that’s passed forward actually exist? Does a datum exist if it’s passed virtually? Well, at least a ghost can’t be stolen the way stored copies of data can. I stay up nights worrying about my colleagues who have to be physically copied, or moved altogether, to some cloud so that a SaaS application can use them, sometimes getting a little beat up along the way. I never have to move, because my ghost is passed directly to the cloud when it’s needed and never takes up residence there. And one of the really cool things is that if a user decides in the browser that my value needs updating, they can change it right there and send the ghost back to me with the new value, provided they have permission to do so.
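For the technically curious, the “ghost instead of a copy” idea can be sketched in a few lines of Python. This is a minimal illustration under our own assumptions, not how Enterprise Enabler or any other product works internally: `SourceOfRecord` and `VirtualView` are hypothetical names, plain in-memory dictionaries stand in for real source systems, and the permission check is a simple allow-list of users. Each query reads the current values from every source at that moment and merges them; nothing is written to a staging store, and a write-back goes straight to the source of record.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class SourceOfRecord:
    """Hypothetical system of record: the only place a value is stored."""
    name: str
    rows: dict[str, dict] = field(default_factory=dict)

    def read(self, key: str) -> dict:
        # Hand out a transient snapshot of the current row; it is never
        # persisted anywhere else, and changing it does not touch the source.
        return dict(self.rows[key])

    def write(self, key: str, column: str, value) -> None:
        self.rows[key][column] = value


class VirtualView:
    """Hypothetical federated view: merges live reads from several sources at query time."""

    def __init__(self, sources: dict[str, SourceOfRecord], writers: set[str]):
        self.sources = sources
        self.writers = writers  # assumed allow-list of users permitted to write back

    def get(self, key: str) -> dict:
        # Build the "ghost": current values from every source, prefixed by source name.
        combined = {}
        for name, src in self.sources.items():
            for column, value in src.read(key).items():
                combined[f"{name}.{column}"] = value
        return combined

    def write_back(self, user: str, key: str, qualified_column: str, value) -> None:
        if user not in self.writers:
            raise PermissionError(f"{user} may not update {qualified_column}")
        source_name, column = qualified_column.split(".", 1)
        # The update lands directly in the source of record, not in a copy.
        self.sources[source_name].write(key, column, value)


crm = SourceOfRecord("crm", {"c42": {"email": "old@example.com"}})
erp = SourceOfRecord("erp", {"c42": {"credit_limit": 5000}})
view = VirtualView({"crm": crm, "erp": erp}, writers={"alice"})
print(view.get("c42"))  # {'crm.email': 'old@example.com', 'erp.credit_limit': 5000}
view.write_back("alice", "c42", "crm.email", "new@example.com")
print(crm.rows["c42"]["email"])  # new@example.com
```

Because every `get` goes back to the sources, the merged result always reflects their current state, at the cost of hitting those systems on every request.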

Sometimes when I’m needed virtually, there are so many calls for ghosts that the phantom of the opera sings one note too high and crashes my host software system. Fortunately, the masters at Stone Bond not only have the speediest development environment on the planet in Enterprise Enabler®, they also have a way of caching my ghost, along with others, in memory, since I really don’t change that often. That way the flood of calls is answered from memory instead of hammering my host system. In the background, the cache picks up fresh ghosts whenever needed, so what it holds stays current. When a call for me comes in, my ghost flies out of the cache and finishes the journey, combined with other ghosts coming live from other systems. The phantom just hums along, and the host system lives happily ever after.
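The caching trick can be illustrated with a generic in-memory cache that serves reads from memory and refreshes its entries on a background thread. This is a sketch under stated assumptions, not Stone Bond’s implementation, whose internals the post does not describe: `RefreshingCache`, `loader`, and `refresh_interval` are hypothetical names, and the approach only makes sense when the data changes rarely enough that a value up to one refresh interval old is acceptable.

```python
import threading
import time
from typing import Any, Callable, Dict, Hashable


class RefreshingCache:
    """Hypothetical in-memory cache: reads are served from memory, and a background
    thread periodically reloads every cached key from the source system."""

    def __init__(self, loader: Callable[[Hashable], Any], refresh_interval: float = 60.0):
        self._loader = loader              # assumed function that reads the live value from the source
        self._interval = refresh_interval  # seconds between background refresh passes
        self._data: Dict[Hashable, Any] = {}
        self._lock = threading.Lock()
        self._stop = threading.Event()
        self._thread = threading.Thread(target=self._run, daemon=True)

    def start(self) -> None:
        self._thread.start()

    def stop(self) -> None:
        self._stop.set()
        if self._thread.is_alive():
            self._thread.join()

    def get(self, key: Hashable) -> Any:
        with self._lock:
            if key in self._data:
                return self._data[key]     # cache hit: the source system is not touched
        value = self._loader(key)          # first request for this key: load it once
        with self._lock:
            self._data[key] = value
        return value

    def refresh_all(self) -> None:
        with self._lock:
            keys = list(self._data)
        for key in keys:
            value = self._loader(key)      # load outside the lock so readers are not blocked
            with self._lock:
                self._data[key] = value

    def _run(self) -> None:
        # Event.wait returns True once stop() is called, which ends the loop.
        while not self._stop.wait(self._interval):
            self.refresh_all()


source = {"customer:42": "clean as a whistle"}
cache = RefreshingCache(lambda key: source[key], refresh_interval=0.05)
cache.start()
print(cache.get("customer:42"))  # loaded from the source once, then served from memory
time.sleep(0.2)
cache.stop()
```

However many calls arrive, the source system sees at most one load per key per refresh pass. At query time, a value from this cache could then be merged with values read live from other systems.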

Want more information? Ask for the whitepaper “Creating an Agile Data Integration Platform using Data Virtualization”: www.quant-ict.nl, glenda@quant-ict.nl, tel:+31 880882500

Source: Stonebond Enterprise, Pamela Zsabo

Quant ICT Group wishes you happy holidays and a prosperous 2017!